5 Things AI Still Can't Do: Exploring Limitations

Aug 19, 2025

Emilia Skonieczna

Everyone's talking about how smart AI is, but have you ever tried explaining what it feels like to be completely out of place in a new city where you don’t know anyone? Let's just say... empathy's not in the code. Sure, it might be polite and say all the right things, but when your story doesn't match the pattern, it doesn't really know what to say, so it just guesses based on what seemed to work before.

Every day, millions of people interact with artificial intelligence - whether through personalized recommendations, voice assistants or advanced automation tools. AI is now capable of tasks we used to consider purely science fiction. It can write, speak, create art and even diagnose diseases. And yet, despite its impressive capabilities, a sense of doubt remains.

So the question is... why do so many of us still hesitate to fully trust it?

Let's start with the basics: what is AI?

It isn't magic. It's just software that finds patterns in data and uses them to make predictions or decisions. It doesn't understand, think, or feel - it just calculates. It's designed to mimic human intelligence, and while it succeeds in some areas, it still falls short of many human skills.

Most of what we call artificial intelligence today is actually good pattern matching, powered by massive amounts of data and computing power. That's it.

But don't let that simplicity fool you. Artificial Intelligence is incredibly powerful - capable of transforming industries, reshaping the way we work and generating results that often seem extraordinary. Still, it remains a tool, not a mind.

One of the reasons we hesitate to trust AI is that it often gives the impression of understanding, but doesn't really understand. When it answers a question or writes a paragraph, it doesn't use conscious thought or awareness - it uses statistical patterns.

It mimics reasoning, but doesn't reason. This illusion can make the AI feel more intelligent than it really is, which leads to both overconfidence and skepticism.

Despite all the impressive things that AI can already do, there are still some major blind spots. It struggles with common sense reasoning, emotional intelligence, and ethical judgement. It can't truly understand context the way we can.

Another big limitation? AI has never lived in the real world. It learns from data, not experience, so when something unexpected or subtle comes up, it might sound confident... and still be completely wrong. That confidence can be misleading, especially when the situation calls for nuance or instinct.

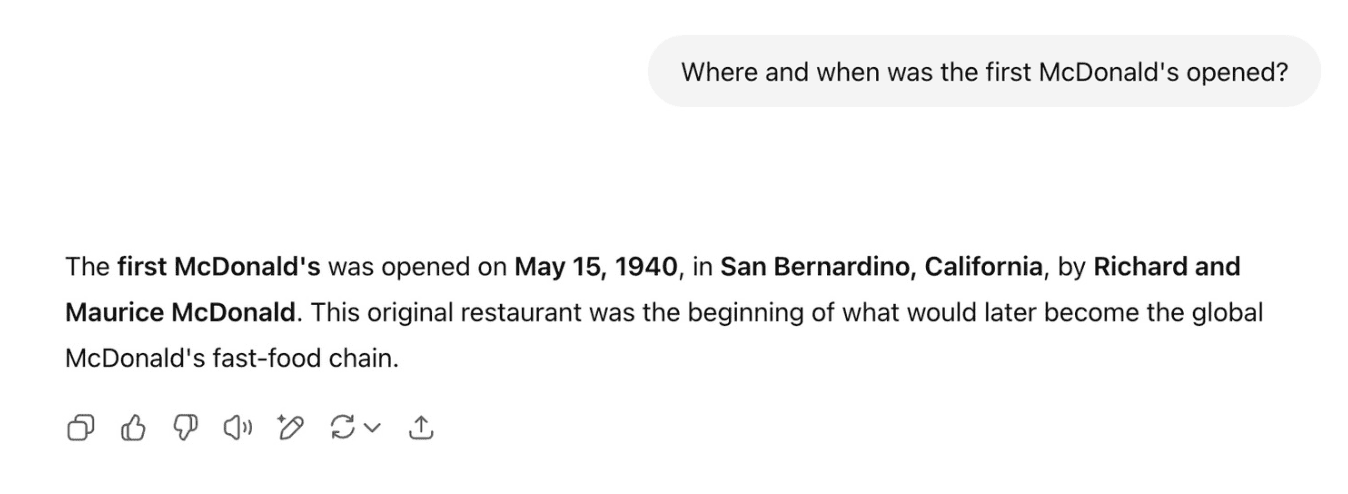

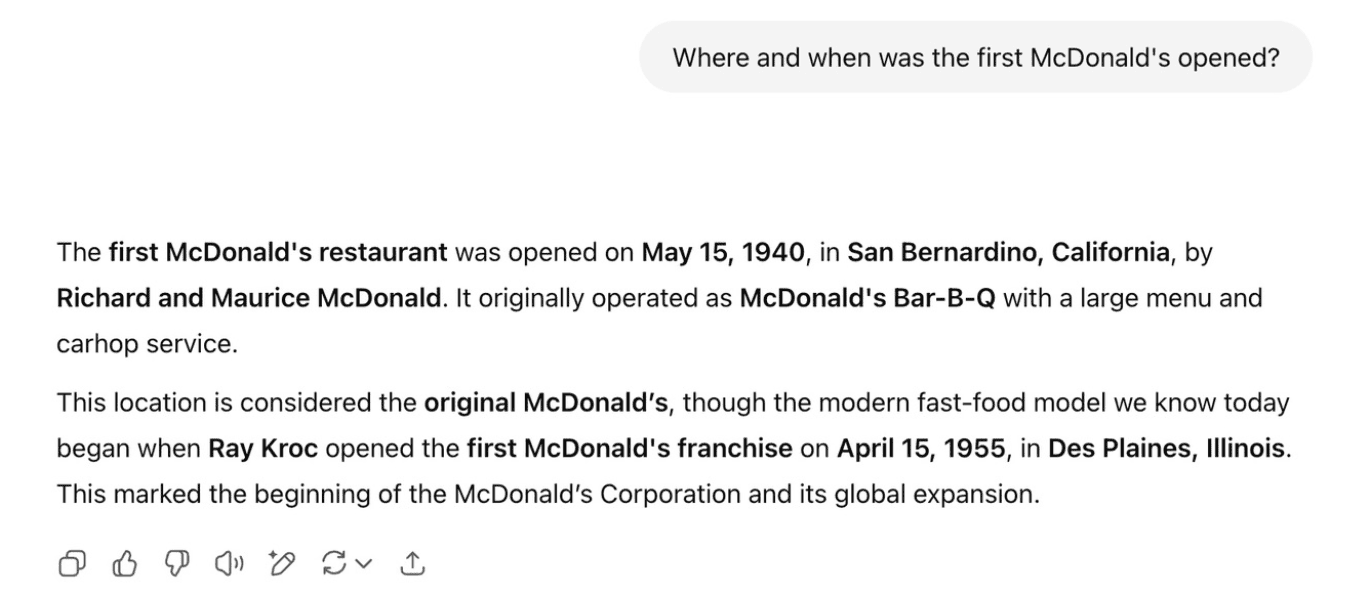

Same question, different answers - even the smartest AI can be inconsistent.

AI gives the impression of intelligence because it produces the right words at the right time. But it doesn't know why it says what it says. There's no thinking behind it, only patterns learned from human writing. It mimics understanding. What looks like thinking is really just pattern matching, based on everything we've trained it on.
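To make "pattern matching" a bit more concrete, here is a deliberately tiny sketch in Python. It is not how any real assistant is built. It is a toy word-pair model, and the corpus and the names `build_bigrams` and `generate` are made up for illustration. It only records which word followed which, then picks the next word at random from what it has seen. That is enough to show two things: the same prompt can give different answers, and the output can sound fine without any understanding behind it.

```python
import random
from collections import defaultdict

# Tiny made-up corpus; real models learn from vastly more text.
CORPUS = (
    "the city is big . the city is loud . the city is new . "
    "the street is loud . the street is empty ."
).split()


def build_bigrams(tokens):
    """Map each word to the list of words that followed it in the corpus."""
    followers = defaultdict(list)
    for current, nxt in zip(tokens, tokens[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, rng, max_words=8):
    """Extend `start` by repeatedly sampling a word seen after the last one."""
    words = [start]
    while len(words) < max_words and words[-1] in followers:
        nxt = rng.choice(followers[words[-1]])
        words.append(nxt)
        if nxt == ".":  # stop at the end of a sentence
            break
    return " ".join(words)


FOLLOWERS = build_bigrams(CORPUS)

if __name__ == "__main__":
    # Same "question" (the starting word), different random seeds.
    for seed in range(3):
        print(generate(FOLLOWERS, "the", random.Random(seed)))
```

Run it a few times with different seeds and the sentence changes, even though the starting word never does. The toy model can also produce sentences that were never in its corpus, simply because each pair of neighbouring words was.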

Ask AI a tough question, and it won't hesitate, even if it has no idea what it's talking about. That's the paradox. It doesn't know that it doesn't know. It doesn't check itself, because it can't. There's no doubt, no hesitation, no maybe. Just a straight-faced response that sounds good enough to believe.

The problem is, it sounds right, even when it's not.
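One way to picture why there is no built-in "maybe": many models turn internal scores into probabilities and then return the top option. The sketch below is a simplified illustration in Python, not a description of any specific product. The answer options, the scores, and the helper name `answer` are all invented for the example. The point is that the procedure always returns something, whether the scores show a clear favourite or a near tie.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def answer(options, scores):
    """Return the highest-probability option and its probability."""
    probs = softmax(scores)
    best = max(range(len(options)), key=probs.__getitem__)
    return options[best], probs[best]


# Hypothetical options and scores, chosen only to illustrate the behaviour.
OPTIONS = ["Paris", "Rome", "Berlin"]

if __name__ == "__main__":
    # A clear favourite: the top answer gets most of the probability.
    print(answer(OPTIONS, [4.0, 1.0, 0.5]))
    # A near tie: the model is barely better than a coin toss,
    # yet it still returns a single answer with no hesitation.
    print(answer(OPTIONS, [0.31, 0.30, 0.29]))
```

In both cases the caller gets one answer back. Unless someone deliberately checks the probability and adds an "I'm not sure" path, the near tie reads exactly as confident as the clear win.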

AI can generate answers, but that's not the same as understanding. It doesn't process meaning, it makes statistical guesses. There is no intent, no emotional context, no sense of purpose, no awareness, no meaning behind the words. Whether it's giving advice, offering comfort, or trying to be funny - it's imitating, not empathizing.

The result? It answers with confidence, and zero comprehension. So... don't mistake the tone for the truth.

It feels like a conversation - and that's exactly the point. The phrasing, the rhythm, the tone... it's designed to sound human. But no matter how natural it seems, there's no real dialogue happening. Just the illusion of it. Behind every response is just code. No consciousness. No perspective. Just a machine reacting to text with more text. And yet, somehow, we keep forgetting that.

We bring the meaning. We project the connection. AI just generates language that fits. It's easy to forget that there's no mind behind the words - because they sound so much like ours. We hear confidence and assume understanding. But behind the words? Nothing. No awareness. No experience. Just patterns, probabilities, and predictions.

And yet, the illusion works - not because the machine understands us, but because we want to be understood. That's the real power: not what AI knows, but how easily we believe it might.

We rely on AI tools every day - often without thinking about what happens behind the scenes. But with every prompt, click, or voice command, we're handing over data. Lots of it. The question is: do we really know where it's going, who controls it, or how it's used?

One of the most fundamental limitations of AI is that we don't always know how it makes decisions. That's called the black box problem - where AI systems, especially those powered by machine learning, produce an output, but the reasoning behind it remains unclear. Even developers often struggle to trace how outputs are generated. The lack of transparency makes it hard to trust them.

A movie suggestion is easy to accept. But when it comes to medical decisions or loan approvals, the stakes are far higher. If we don’t understand how those decisions are made, how can we trust them?

Who owns the data AI learns from? Most of the time - not you.

Every search, click, or message can quietly end up training a model. The problem? Consent isn't part of the deal. AI doesn't ask; it collects, categorizes, and learns, often from information you never meant to end up in someone else's model.

The line between private and public blurs with every interaction. Once it's in, you can't take it back. So before you give your data to a system, ask yourself - would you post it online?

AI doesn't have to think for itself to do damage. It just needs to be in the wrong hands. And that's exactly when it becomes dangerous - when people use it to spread fake news, scale up scams, or generate content designed to mislead.

The same tools that help you write a newsletter or automate customer service can be used to manipulate, impersonate, or exploit. And that's the darker side of AI's potential.

Without ethics, rules, and transparency, we're not just using AI - we're exposing ourselves to risks we barely understand.

We use AI every day, to analyze data, answer questions, even generate ideas. But there are still things AI can't do yet - at least not like humans.

Even with advanced image recognition and years of research and development, it lacks the human element. It may be faster than humans at some tasks, but when it comes to context, meaning, and instinct, the limits of AI are quite clear.

AI may sound intelligent, but don't expect it to cite sources like a pro. Ask for sources, and it can give you a mix of real studies and... fiction. That's not just inconvenient - it's risky, especially when we rely on it to support business, education, or other important fields.

The truth? AI doesn't check facts - it generates text. So when it gives you a source, there's no guarantee it's real.

Creativity definitely isn't one of AI's strengths. It can mix trends, copy styles, and imitate voices, but original ideas? Those still come from us.

Artificial Intelligence learns from what's already been done, so it follows existing patterns instead of coming up with something original. That's why it seems clever, but it's rarely surprising. AI can't create things that it hasn't seen before - because it can't imagine the unknown.

AI can help you choose what to buy, but you shouldn't trust it blindly - at least not always. If the model is trained on biased or branded data, its suggestions might lean more toward what sells than what fits.

That's one of the limitations of AI: it can't make independent decisions or question the data it's trained on.

My advice? Let AI show you the options, but you still have to be the one with the brain ;)

You can talk to an AI about grief, burnout, or a career crisis, and it will try its best to sound supportive, but that's not empathy. AI doesn't know what it means to feel uncertain, conflicted, or vulnerable. It doesn't understand - it responds. And that's the difference: real human interaction can't be faked, even by the best simulation.

Language is no longer a barrier for AI - at least not at first sight. It can summarize books, translate languages, and even write poetry. But it still struggles with what's between the lines. AI can sometimes interpret subtle or sarcastic content, but just as often, it takes things literally.

Some tasks that require cultural context, irony, or subtle shifts in tone are still challenging, because machine learning doesn't really understand them. At least not yet.

AI is changing everything. But instead of asking if it's smarter than us, maybe we should ask: what role do we want to play? Because while AI is great at generating a lot, it can't replace a human perspective.

AI shouldn't replace people. It should work with us - not instead of us.

Let's stop chasing full automation like it's the end goal - it's not. The real value comes from smart collaboration, where AI supports human work rather than erasing it. Because progress isn't about doing less.

It's about doing the right things, better.

If we don't understand how it works, we can't control what it does. That's why ethics, accountability, and transparency have to come first. Because when systems impact people, trust isn't optional. No more black boxes, no more hiding behind complexity.

If it's powerful, it has to be clear.

AI is impressive, but doesn't think like a human - and that's kind of the point.

We should be excited about what's possible, but without the right questions, we risk the kind of surprises no one wants.

Curiosity moves us forward. Caution keeps us safe. That's why understanding what AI can't do is just as important as knowing what it can.

So... trust it, but question it.

AI can do a lot, but it cannot understand what it means to be human. It doesn't understand, think, or feel. It's just calculating. That's why trust requires more than impressive results - it requires transparency, ethics and human oversight.

AI is a tool, not a mind. Use it wisely, question it often, and never forget: understanding is still a human privilege.
