- AI

- DATA SCRAPING

- AI COMPANIES

Artificial Intelligence Companies and the Dark Side of Data Scraping

Aug 26, 2025

-

Emilia Skonieczna

-

7 minutes

Today's world is based on one thing: data. Especially as artificial intelligence advances every day. And as we know, behind every AI tool is a mountain of data - but where does it all come from?

With the development of artificial intelligence comes controversy over how that data is collected. From copyright issues to consent issues, the line between innovation and exploitation is becoming increasingly blurred.

Data Scraping is the process of collecting information, usually from websites, using tools like bots or web crawlers. Sounds harmless, but here's the catch - a lot of that data is collected without creators' knowledge or permission.

As AI systems become more advanced, a lot of people have questions about whether scraping activities cross ethical or legal boundaries - especially when it involves personal data or intellectual property.

The most important question is whether it is acceptable to use data someone shared online for an entirely different purpose, such as AI training, without their knowledge or consent. Many would say no.

People often have no idea that their work is being harvested and repurposed. The lack of transparency in how companies collect and use data has led to serious concerns. When personal blogs, artwork, or even medical forums end up in datasets without consent, it becomes clear why some people call this the dark side of AI.

So the real question isn't just whether we can use this data - it's whether we should.

Data scraping lives in a legal grey area. Technically, a lot of the data scraped by AI models is publicly available - but does that make it fair game? This is where things become unclear. Some platforms specify rules against scraping in their terms of service, but enforcing them is difficult, and many companies are still trying to push the boundaries.

Lawsuits are stacking up, especially as AI companies are being accused of crossing the line on intellectual property and violating data protection rules. The key battle is about consent and privacy. Just because the information is online doesn't automatically mean it can be collected, repurposed, and commercialized. Courts are now being asked to define what publicly available data really means, because availability doesn't always equal permission.

For AI companies, the stakes couldn't be higher. On the one hand, they need data to survive - on the other, collecting it in the wrong way can put their reputation at risk, cause legal problems, slow down progress, and even lead to unauthorized use.

As legal and ethical standards evolve, data decisions come with more that just technical consequences. Where data comes from, and how it's used, has become a reputational, legal, and strategic concern.

AI companies are under growing pressure to prove they collect and use data ethically, not just legally.

Training AI models requires data - lots of it. Most of it comes from the open web, including blogs, forums, articles, and code repositories. It's fast, it's cheap, and it's everywhere. But it's also controversial. Content creators and companies are pushing back, raising questions about consent, copyright, and control.

The result? A wave of lawsuits.

The legal pushback against AI companies is no longer theoretical - it's happening in courtrooms around the world. Some cases are already shaping the conversation around copyright and artificial intelligence. Others are just getting started.

Some of the biggest names in tech are in the spotlight:

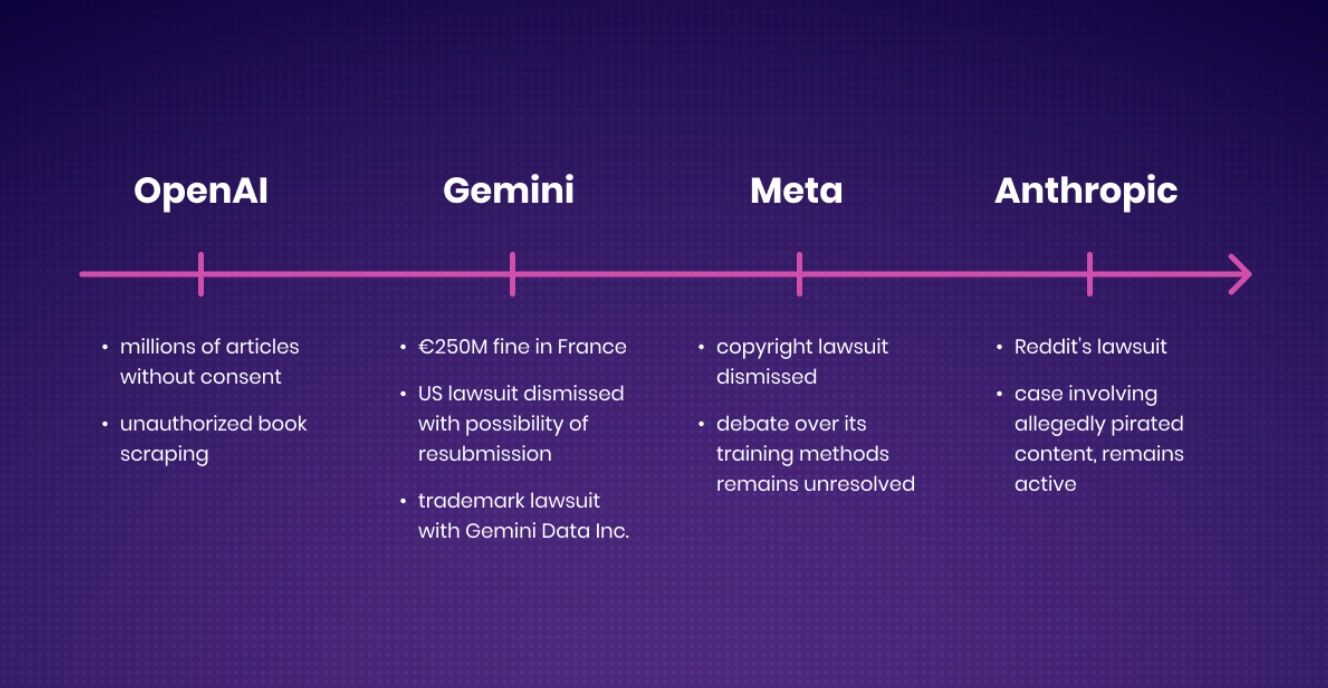

OpenAI is facing multiple lawsuits over how it trained its models. The most high-profile one comes from The New York Times, which accuses OpenAI and Microsoft of using millions of its articles without permission.

Moreover, authors such as Sarah Silverman and George R.R. have also taken legal action, claiming that their books were scraped and reused without permission. Some claims have been dismissed, but the copyright fight is far from over.

Google's Gemini isn't free from legal trouble either. In France, the company was fined €250 million for using news content from French publishers without proper disclosure.

In the U.S., a class-action lawsuit over data scraping was recently dismissed - but with permission to refile. On top of that, Google is also facing a trademark lawsuit from Gemini Data Inc.

The heart of the issue is familiar: what counts as fair use in the age of AI.

Meta recently won a round in court. A U.S. judge dismissed a copyright lawsuit filed by a group of authors over the company's Llama model. Meta may have won this case, but the broader debate over whether its training methods violate copyright law remains unresolved.

It's a win for now - but the questions aren't going away.

Even Anthropic, creator of Claude, hasn't escaped legal heat, despite keeping a lower profile. The company is part of broader lawsuits targeting generative AI companies for using protected works without consent. But recently, Anthropic secured one of the first court wins related to fair use.

Still, not all claims were thrown out. Another part of the same case, involving allegedly pirated content, remains active. Meanwhile, Reddit has filed a separate lawsuit accusing Anthropic of scraping its platform without permission.

OpenAI and Google are in the thick of it. Meta has just gotten out of one case - for now. Anthropic, despite winning, isn't out of the legal woods either.

But it's not just about content rights - it's also about data privacy. As AI companies collect massive amounts of data to train their systems, concerns about protecting data are turning into a serious test for privacy laws and responsible AI.

AI progress has reached a point where regulators are stepping in. New rules aim to limit unethical data practices, especially around web scraping, where standards remain unclear.

Governments are now facing one of the toughest challenges - staying open to innovation while protecting people's rights. Many legal systems weren't prepared for the speed and scale of AI innovation, and they're still catching up. The laws are coming, but enforcement is another story. In many ways, regulation remains a suggestion, not a boundary.

The EU AI Act is a milestone - the first major law that goes straight to the heart of AI: the data. It holds companies accountable for how they collect, use, and manage that data, especially when it's scraped from the web.

The law introduces a risk-based system that ranks AI tools based on how much harm they can cause - to people, to rights, to society. It also strengthens GDPR-style protections, with additional rules on biometric data, copyrighted content, and anything taken without consent.

You can think of it as the sharper, smarter sibling of GDPR - but it goes deeper into how that data is used.

Data might be the fuel behind AI - but not all of it is clean. With lawsuits piling up and regulators stepping in, one thing is clear: how companies collect and use data is no longer just a technical detail - it's a matter of ethics, legality, and trust.

In the rush to build smarter systems, AI companies have a choice: continue collecting data without consent, or rethink their approach before the courts - and the public - make the choice for them.

Did you know you can reduce business downtime with AI? See how tools from smart monitoring to predictive maintenance keep your business online.

Explore how AI transforms cybersecurity with intelligent threat detection, advanced tools, and smarter defense systems. Is your organization ready to keep up?

Discover how AIOps is transforming DevOps by integrating AI and ML to streamline operations, increase efficiency, and enhance decision-making for better outcomes.