How to get scalable and cost-efficient infrastructure for a multiplayer game

Oct 15, 2025 | Emilia Skonieczna | 10 min read

What's one of the most important things in a multiplayer game? The answer is simple: infrastructure. Imagine your game suddenly gets thousands of players - and your servers can't handle it. Total chaos.

Someone's just about to win, it's the most intense moment... and the game crashes. The result? Frustration, disappointment, and players leaving your game. The worst possible outcome.

So, how can you build a scalable yet cost-efficient infrastructure? Here's the deal.

If you're building a multiplayer game, you need an infrastructure stack that can handle both real-time performance and long-term reliability. Beyond simple hosting, your systems should be able to manage things like:

- running live sessions
- keeping player data in sync
- making sure the game stays stable even when the number of players goes up or down

Behind all of that, three things matter most: speed, stability, and scalability. What makes the difference is how well your architecture keeps them in balance.

In most cases, a multiplayer setup includes several key services working together.

The game server hosts the authoritative logic, which ensures consistent rules and outcomes across all players.

The matchmaking backend connects users based on skill or region.

Lobby and player state management systems maintain session continuity.

Data persistence components store user progress and session data.

Analytics and telemetry tools monitor performance, engagement, and network health, with lightweight event logging capturing in-game data without adding latency.

How you combine them really depends on the game itself - but together, they form the core of a flexible, cost-efficient infrastructure stack that can evolve along with the game's scale.
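As a concrete illustration of the matchmaking backend, here's a minimal Python sketch that groups queued players by region and then by skill band. The `Player` fields, the 200-point band width, and the four-player match size are assumptions for illustration, not values from any particular matchmaker.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Player:
    player_id: str
    region: str   # hypothetical region label, e.g. "eu"
    rating: int   # skill rating, e.g. an Elo-style number

def make_matches(queue, match_size=4, band_width=200):
    """Group queued players into matches of `match_size` that share a region
    and a skill band. Players who can't fill a match stay in the queue."""
    buckets = defaultdict(list)
    for p in queue:
        # Same region and same rating band -> same bucket.
        buckets[(p.region, p.rating // band_width)].append(p)

    matches, leftover = [], []
    for bucket in buckets.values():
        bucket.sort(key=lambda p: p.rating)  # closest ratings end up together
        full = len(bucket) - len(bucket) % match_size
        for i in range(0, full, match_size):
            matches.append(bucket[i:i + match_size])
        leftover.extend(bucket[full:])
    return matches, leftover
```

Production matchmakers usually also widen the skill band for players who have waited too long, trading some match quality for shorter queue times.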

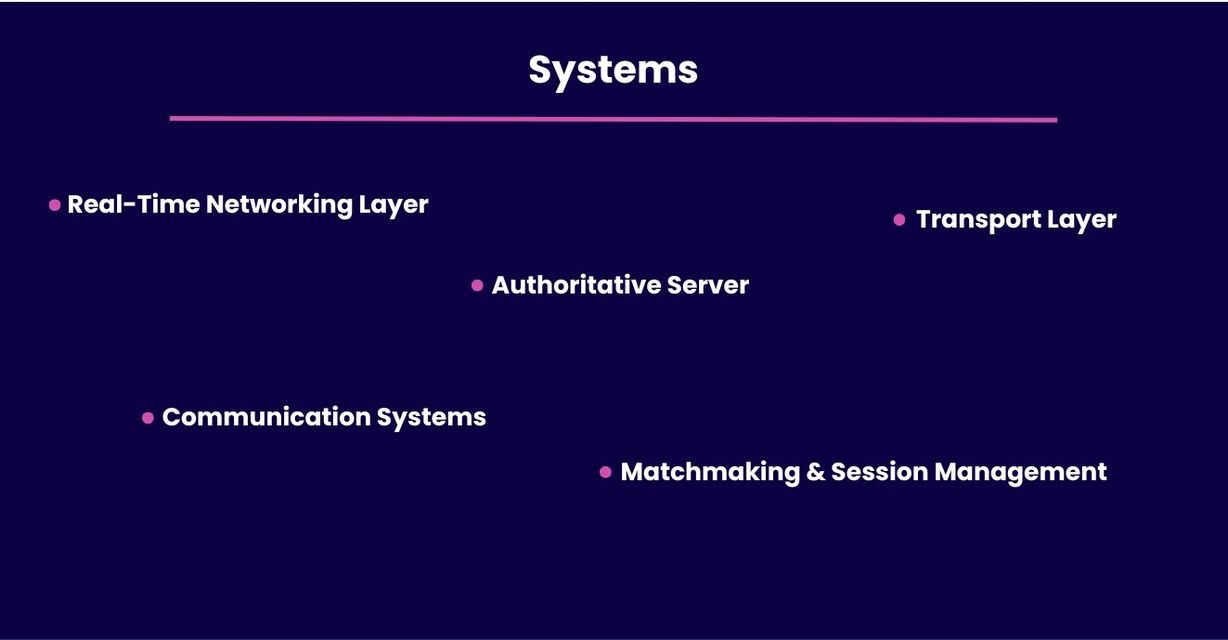

At the heart of moment-to-moment gameplay is the real-time networking layer, usually built on UDP or WebSockets, which enables instant player actions and feedback.

Meanwhile, the authoritative server manages the true state of the world. It distributes updates to clients through replication and state synchronization mechanisms.
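To show what "authoritative" means in practice, here's a minimal sketch. Clients only send requested moves, the server validates and applies them, and then it replicates just the state that changed. The movement rule (`MAX_SPEED`) and the data layout are hypothetical.

```python
from dataclasses import dataclass, field

MAX_SPEED = 5.0  # hypothetical rule: max distance a player may move per tick

@dataclass
class World:
    positions: dict = field(default_factory=dict)  # player_id -> (x, y)
    tick: int = 0

def apply_inputs(world, inputs):
    """Apply client move requests. The server, not the client, decides the result."""
    for player_id, (dx, dy) in inputs.items():
        if player_id not in world.positions:
            continue  # ignore input from unknown players
        # Clamp the requested move so a modified client can't "speed hack".
        length = (dx * dx + dy * dy) ** 0.5
        if length > MAX_SPEED:
            dx, dy = dx * MAX_SPEED / length, dy * MAX_SPEED / length
        x, y = world.positions[player_id]
        world.positions[player_id] = (x + dx, y + dy)
    world.tick += 1

def delta(previous, current):
    """Return only added or changed entries: this is what gets sent to clients."""
    return {pid: pos for pid, pos in current.items() if previous.get(pid) != pos}
```

Sending only the delta instead of the full world state keeps bandwidth proportional to what actually happened during a tick.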

All that data flows through a dedicated transport layer. It's what keeps latency low and synchronization reliable.

Matchmaking and session management connect players to the right game instance.

Lastly, communication systems - like voice and chat - provide real-time interaction over low-latency channels.

The result? Smooth player experience even under heavy traffic.

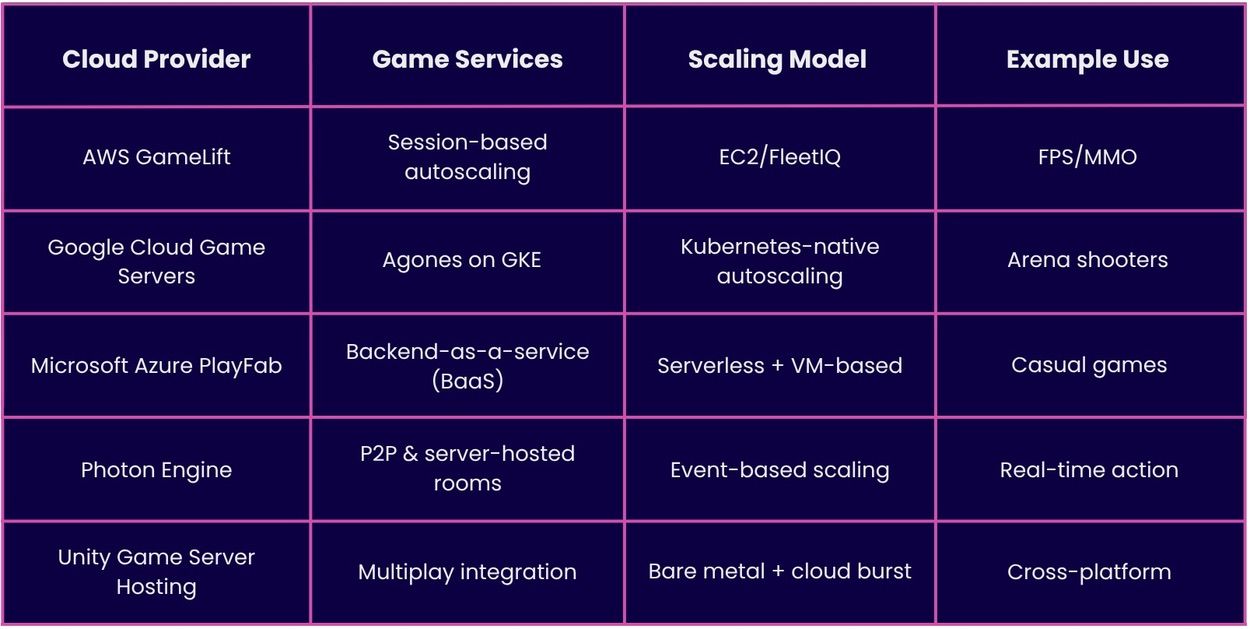

When you're choosing a cloud for a multiplayer game, you're really selecting the infrastructure that will allow you to grow from small to large scale. The strongest options are usually the big three cloud providers along with specialist hosts that focus on multiplayer traffic patterns.

Platforms like AWS, Google Cloud, and Microsoft Azure stand out because they combine elastic compute services with advanced networking and fully managed data layers.

For many teams, "the best" means a platform that allows developers to iterate quickly, but also keep costs predictable and latency low.

If you need deep control over dedicated servers and fleets - AWS's fleet management (Amazon GameLift) stands out.

If you favor container workflows and Kubernetes-first operations - Google's GKE fits well.

If you prefer managed backends and built-in identity services - Azure's backend-as-a-service (BaaS) ecosystem, built around PlayFab, is appealing.

Specialized providers like Photon or Unity Hosting are great choices when you want to ship quickly using prebuilt backends and scalable matchmaking.

Cloud platforms can absorb unpredictable peaks by scaling horizontally behind load balancers, spreading capacity across multiple regions and availability zones, and rolling out new images and containers quickly. This lets your authoritative server fleet scale within minutes.

Behind all of that, autoscaling policies react to queue depth, CPU usage, and session metrics. Traffic managers route players to the nearest region, which reduces latency compared to a single-region setup. Content delivery networks serve static assets, so the client and server can focus on critical game logic and efficient data sync.
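Traffic managers often steer players through DNS, but many games also have the client ping each region and join the fastest one. Here's a minimal sketch of that client-side choice, assuming hypothetical region names, a 120 ms cutoff, and a default fallback region.

```python
from statistics import median

def pick_region(rtt_samples_ms, max_acceptable_ms=120.0, fallback="us-east"):
    """Choose the region with the lowest median round-trip time.

    rtt_samples_ms maps region name -> list of ping results in milliseconds.
    The median is used so a single delayed packet doesn't skew the choice.
    """
    best_region, best_rtt = None, float("inf")
    for region, samples in rtt_samples_ms.items():
        if not samples:
            continue  # region unreachable in this measurement round
        rtt = median(samples)
        if rtt < best_rtt:
            best_region, best_rtt = region, rtt
    if best_region is None or best_rtt > max_acceptable_ms:
        return fallback  # hypothetical default region
    return best_region
```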

If you do it right, the whole setup can handle large numbers of players without breaking a sweat - and still deliver a smooth, consistent gameplay experience.


Cost control in multiplayer infrastructure comes down to matching capacity to player demand while keeping performance targets consistent. That means using scalability features that react quickly and implementing safeguards that prevent runaway spending.

Use spot or preemptible instances for non-critical workloads like analytics, build pipelines, or overflow match hosts - they reduce compute costs significantly. Pair them with auto-scaling groups to maintain resilience when interruptions occur.

Autoscale game servers based on player demand to pay only for the capacity you need. Align scaling policies with average session length and queue times to handle player surges efficiently.
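Here's one way such a policy could compute a target fleet size, sketched in Python. Every parameter (players per server, server startup time, minimum and maximum bounds) is a hypothetical placeholder that you'd tune from your own session and queue metrics.

```python
import math

def desired_servers(active_players, queued_players, arrivals_per_min,
                    players_per_server=10, startup_minutes=2.0,
                    min_servers=2, max_servers=500):
    """Target fleet size for the next scaling step (hypothetical policy).

    - Capacity for everyone playing or waiting right now.
    - Plus a warm buffer for players expected to arrive while new servers
      boot, so queue times don't spike during a surge.
    """
    needed_now = math.ceil((active_players + queued_players) / players_per_server)
    warm_buffer = math.ceil(arrivals_per_min * startup_minutes / players_per_server)
    return max(min_servers, min(max_servers, needed_now + warm_buffer))

def servers_to_remove(current, target, empty_servers):
    """Scale down only by retiring empty servers, so running matches can finish."""
    return min(max(0, current - target), empty_servers)
```

Retiring only empty servers ties scale-down to session length: capacity is released as matches naturally end, rather than cutting players off mid-game.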

Distribute servers across multiple regions and zones, placing shards closer to players. This reduces cross-region traffic and data transfer costs, lowers latency, and keeps interconnects cheaper.

Schedule server spin-downs during low-traffic hours so idle fleets do not waste budget overnight or between events.
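Scheduled spin-downs can be as simple as a time-of-day floor for the fleet size. This sketch assumes a hypothetical UTC schedule. The autoscaler can still add servers above the floor if demand appears.

```python
from datetime import datetime, timezone

# Hypothetical schedule: (start_hour_utc, end_hour_utc, minimum servers).
# Windows are half-open [start, end); a window with start > end wraps past midnight.
SCHEDULE = [
    (6, 16, 10),   # daytime
    (16, 23, 40),  # evening peak
    (23, 6, 2),    # overnight: keep only a small warm pool
]

def min_fleet_size(now, schedule=SCHEDULE, default=2):
    """Return the minimum number of servers to keep running at time `now`."""
    hour = now.astimezone(timezone.utc).hour
    for start, end, minimum in schedule:
        if start < end:
            in_window = start <= hour < end
        else:
            in_window = hour >= start or hour < end
        if in_window:
            return minimum
    return default
```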

Use hybrid models: run the predictable baseline load on bare metal and burst into the cloud for peaks. This combines the flat cost of dedicated hardware with cloud elasticity and usage-based billing, keeping costs stable during large-scale launches or seasonal spikes.
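At its simplest, a hybrid plan splits capacity this way: fill the fixed bare-metal pool first and rent only the remainder from the cloud. Here's a minimal sketch with a hypothetical pool size and per-server capacity.

```python
import math

def plan_capacity(expected_peak_players, players_per_server=10,
                  bare_metal_servers=50):
    """Split capacity between a fixed bare-metal pool and elastic cloud servers.

    Bare metal covers the predictable baseline at a flat cost; only demand
    above it is rented from the cloud and billed per use.
    """
    total = math.ceil(expected_peak_players / players_per_server)
    on_bare_metal = min(total, bare_metal_servers)
    return {"bare_metal": on_bare_metal, "cloud": total - on_bare_metal}
```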

These strategies keep cost optimization aligned with uptime and latency goals, ensuring players get a smooth experience without overspending.

Maintaining high performance requires careful planning.

First, distribute servers across multiple regions and zones to minimize cross-region traffic and reduce latency by serving players from locations closer to them. Then combine this setup with auto-scaling groups that adjust capacity based on player concurrency, so peak demand doesn't affect uptime.

Additionally, use spot instances for background or non-critical services to save money without compromising core performance.

Together, these measures minimize costs while sustaining high uptime, low latency, and consistent performance across global multiplayer sessions.

High concurrency doesn't come from one framework that does magic things. It comes from an overall architecture that minimizes contention, scales horizontally, and keeps the game state authoritative. Architectural patterns that support this include:

Distributed state management (Redis/Memcached) - to handle shared data and reduce database contention

Microservices for game logic and authentication - to let stateless services autoscale independently

Pub/Sub messaging for event broadcasting - to decouple producers from consumers and keep fanout scalable

Load-balanced session management - to distribute connections across shards and regions

Eventual consistency in non-critical features - to keep the hot path fast while background tasks sync safely
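For load-balanced session management, one common technique is consistent hashing. Each session ID maps to a shard, and adding a shard moves only a fraction of existing sessions. Here's a minimal sketch with hypothetical shard names and virtual-node count.

```python
import bisect
import hashlib

class HashRing:
    """Consistent-hash ring mapping session IDs to shards.

    Each shard gets several virtual nodes so load spreads evenly. Adding a
    shard only remaps the sessions that hash next to its new points.
    """

    def __init__(self, shards, vnodes=100):
        self.vnodes = vnodes
        self._points = []   # sorted hash positions on the ring
        self._owners = {}   # hash position -> shard name
        for shard in shards:
            self.add(shard)

    @staticmethod
    def _hash(key):
        return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

    def add(self, shard):
        for i in range(self.vnodes):
            point = self._hash(f"{shard}#{i}")
            self._owners[point] = shard
            bisect.insort(self._points, point)

    def shard_for(self, session_id):
        # First ring point clockwise from the session's hash (wrapping around).
        idx = bisect.bisect(self._points, self._hash(session_id)) % len(self._points)
        return self._owners[self._points[idx]]
```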

Use them right, and you've got the foundation of a high-concurrency design.

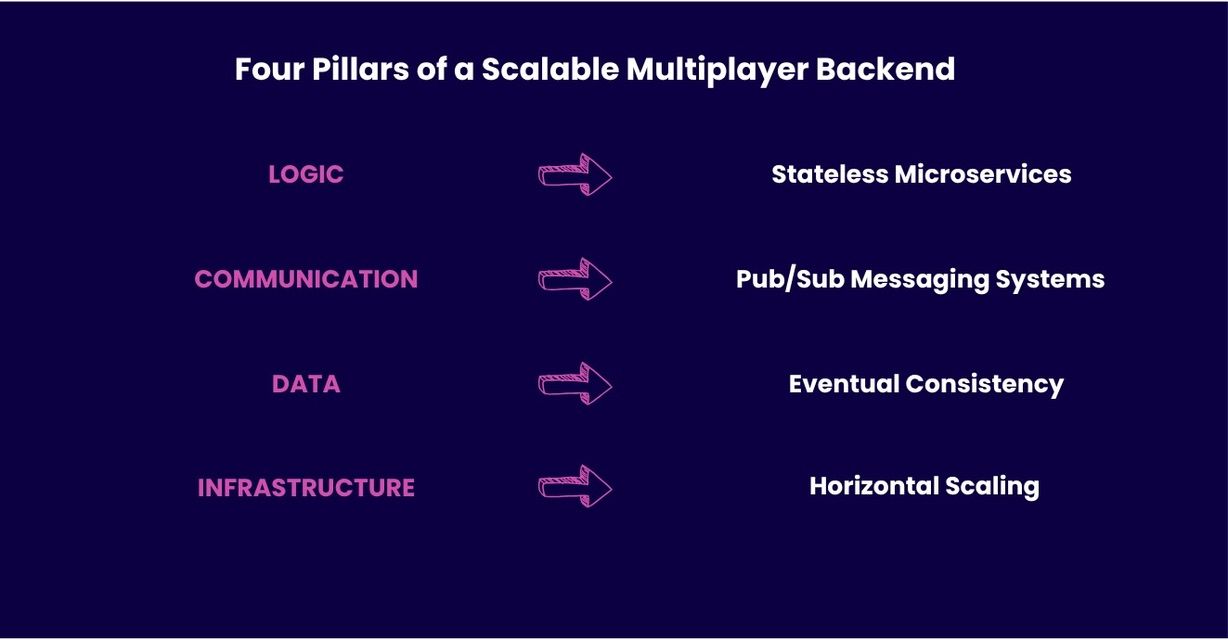

A game must handle thousands of simultaneous sessions - and what determines how gracefully it scales? Backend design.

Let's start with stateless microservices. They work best when critical game logic is kept close to authoritative hosts, while side features (like cosmetics or player profiles) run separately.

Moving on to communication - Pub/Sub messaging systems, like Kafka or NATS, handle match updates, chat, and telemetry without blocking gameplay. They decouple producers from consumers so the system doesn't slow down while sending updates to many clients.
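You can sketch the decoupling idea without Kafka or NATS. Below is a tiny in-process broker (an illustration, not the API of either system): the publisher never waits for consumers, and a slow subscriber loses messages instead of stalling gameplay. Topic names and the queue limit are hypothetical.

```python
import asyncio
from collections import defaultdict

class Broker:
    """Tiny in-process pub/sub broker (illustration only).

    Publishers never wait on subscribers: each subscriber has its own bounded
    queue, and a full queue drops the message for that subscriber only.
    """

    def __init__(self, max_pending=100):
        self.max_pending = max_pending
        self._subs = defaultdict(list)   # topic -> list of asyncio.Queue
        self.dropped = 0

    def subscribe(self, topic):
        q = asyncio.Queue(maxsize=self.max_pending)
        self._subs[topic].append(q)
        return q

    def publish(self, topic, message):
        for q in self._subs[topic]:
            try:
                q.put_nowait(message)
            except asyncio.QueueFull:
                self.dropped += 1   # slow consumer: skip instead of blocking
```

Dropping on overflow suits telemetry. For chat or match results, you'd rely on a durable broker such as Kafka or NATS JetStream instead.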

Non-critical systems can rely on eventual consistency, allowing stores, progression, and side features to update asynchronously without interrupting gameplay.
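Here's a minimal sketch of that pattern, using an in-memory queue and dictionary as hypothetical stand-ins for a real message queue and database. Gameplay records progression events cheaply, and a background worker applies them later. Event IDs make the worker idempotent, so a redelivered event isn't counted twice.

```python
from collections import deque

class ProgressionSync:
    """Applies progression updates after the fact, outside the gameplay loop."""

    def __init__(self):
        self.pending = deque()
        self.applied_ids = set()
        self.xp = {}   # player_id -> total XP (stand-in for a database table)

    def record(self, event_id, player_id, xp_gained):
        # Hot path: just enqueue, no database call during the match.
        self.pending.append((event_id, player_id, xp_gained))

    def drain(self, batch_size=100):
        # Background worker: apply up to `batch_size` queued events.
        for _ in range(min(batch_size, len(self.pending))):
            event_id, player_id, xp_gained = self.pending.popleft()
            if event_id in self.applied_ids:
                continue  # duplicate delivery, already counted
            self.xp[player_id] = self.xp.get(player_id, 0) + xp_gained
            self.applied_ids.add(event_id)
```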

Now, let's talk about infrastructure. Scaling horizontally across load balancers, containers, or regional shards keeps performance predictable as concurrency grows. Whether it's Redis, Kafka, or stateless services, the goal remains the same - to maintain a scalable, cost-efficient backend that keeps latency low and fairness intact for every player.

Be honest - what's worse: building a great game that breaks under load, or never reaching the players who could've loved it?

If you don't want to end up in either situation, great - then you need Kubernetes (K8s).

It provides the foundation for hosting containerized game servers that can grow with player demand. By using Helm charts and container registries, game developers can deploy fleets of dedicated servers quickly and keep updates consistent across all regions.

With node pools and auto-provisioning, you can spin up compute resources on demand - reducing idle capacity and overall server costs.

Here's how you can get started:

1. Containerize your game server.
2. Define deployments and services.
3. Integrate with Agones.
4. Automate updates and monitoring.
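In practice, the Kubernetes and Agones side of these steps usually comes down to an Agones `Fleet` (your containerized server plus its port settings) and a `FleetAutoscaler`. To keep all examples in one language, this sketch builds both manifests in Python and prints them as JSON, which `kubectl apply -f` accepts. The server name, image, port, and buffer sizes are hypothetical, so check field names against the Agones version you install.

```python
import json

def game_server_manifests(name, image, port=7654, buffer_size=2,
                          min_replicas=2, max_replicas=50):
    """Build an Agones Fleet plus a Buffer-policy FleetAutoscaler.

    `name`, `image`, and `port` are placeholders for your own game server.
    """
    fleet = {
        "apiVersion": "agones.dev/v1",
        "kind": "Fleet",
        "metadata": {"name": name},
        "spec": {
            "replicas": min_replicas,
            "template": {"spec": {
                # Agones assigns a host port dynamically and maps it to the
                # container port the game server listens on.
                "ports": [{"name": "default", "portPolicy": "Dynamic",
                           "containerPort": port, "protocol": "UDP"}],
                "template": {"spec": {"containers": [
                    {"name": name, "image": image},
                ]}},
            }},
        },
    }
    autoscaler = {
        "apiVersion": "autoscaling.agones.dev/v1",
        "kind": "FleetAutoscaler",
        "metadata": {"name": f"{name}-autoscaler"},
        "spec": {
            "fleetName": name,
            # Keep `bufferSize` Ready servers on top of the Allocated ones.
            "policy": {"type": "Buffer", "buffer": {
                "bufferSize": buffer_size,
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
            }},
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [fleet, autoscaler]}

if __name__ == "__main__":
    # Hypothetical registry and image tag.
    print(json.dumps(game_server_manifests(
        "my-game-server", "registry.example.com/my-game-server:1.0.0"), indent=2))
```

If you save the output as `fleet.json`, `kubectl apply -f fleet.json` should create both resources. Changing the image and re-applying later triggers a rolling update by default, which covers part of the last step.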

Once this setup is in place, Kubernetes handles the rest - scaling your servers with demand while keeping latency low and costs predictable.

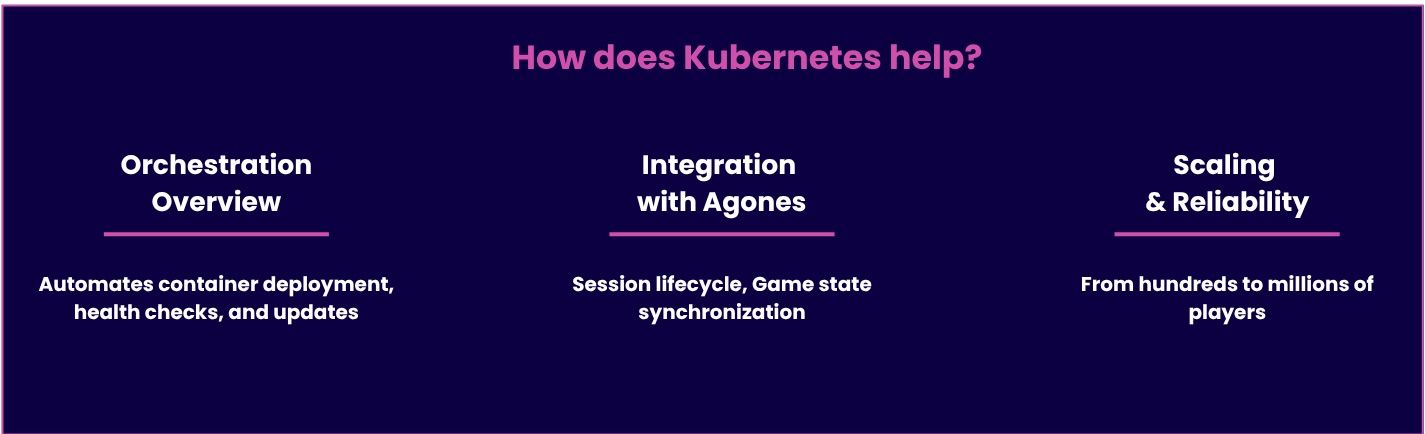

But why exactly should you choose Kubernetes? Let me explain.

It has become a standard for multiplayer backends because of its combination of reliability and flexibility. Multiplayer games require fast reaction to variable player loads and low network latency - something Kubernetes achieves through region-aware scheduling and intelligent load balancing.

K8s also integrates with Agones, an open-source project for hosting and scaling dedicated game servers on Kubernetes. It manages the full game server lifecycle, from startup and allocation to a match through graceful shutdown.

Together, they provide a scalable hosting layer that your matchmaker can allocate servers from, ensuring a consistent game experience even when handling thousands of concurrent sessions.

In large-scale environments, orchestration is what keeps the client and server ecosystem stable. Kubernetes automates health checks, rolling updates, and container restarts. Agones adds game-aware rules on top: servers hosting a live match (marked Allocated) are not removed during scale-down or fleet updates, so each match keeps its authoritative game state on its own server until the session ends.
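To make the "don't disrupt live matches" rule concrete, here's a simplified model (not the Agones SDK) of game server states and a rolling update that replaces only idle servers. The state names follow Agones terminology, but the subset and the logic are an illustration.

```python
from dataclasses import dataclass

# Simplified subset of Agones GameServer states; the real lifecycle has more
# (for example Scheduled, RequestReady, Reserved, Unhealthy).
ALLOWED = {
    "Starting": {"Ready"},
    "Ready": {"Allocated", "Shutdown"},
    "Allocated": {"Shutdown"},   # a live match ends only via shutdown
    "Shutdown": set(),
}

@dataclass
class GameServer:
    name: str
    version: str
    state: str = "Starting"

    def transition(self, new_state):
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.name}: {self.state} -> {new_state} not allowed")
        self.state = new_state

def rolling_update(servers, new_version):
    """Retire outdated servers that aren't hosting a match. Allocated ones keep
    running until their session ends and they shut down on their own."""
    replaced = []
    for gs in servers:
        if gs.version != new_version and gs.state == "Ready":
            gs.transition("Shutdown")
            replaced.append(gs.name)
    return replaced
```

In a real deployment, the game server itself tells Agones when a match is over (through the Agones SDK's shutdown call), and only then is the old version retired.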

This orchestration model allows the game backend to handle large numbers of players while maintaining efficient data routing and minimal lag.

By prioritizing scalability and reliability, Kubernetes helps teams build infrastructure that keeps gameplay smooth for millions of players worldwide.

Even with the right architecture and orchestration, small details can make a big difference, so keep these things in mind:

Separate user authentication from gameplay - isolating login and identity services prevents authentication spikes from affecting live matches.

Track in-game events with low-overhead logging - lightweight event tracking helps monitor performance without increasing latency.

Set server lifecycle limits to free unused capacity - automatically terminate idle resources to keep infrastructure efficient and cost-effective.
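To separate authentication from gameplay, the auth service can issue a short-lived signed ticket that match servers verify locally, so login spikes never reach them. Here's a minimal standard-library sketch, assuming a hypothetical shared HMAC secret. Production systems often use JWTs signed with asymmetric keys instead.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"replace-with-a-real-secret"  # hypothetical shared signing key

def issue_token(player_id, ttl_s=300, now=None):
    """Auth service side: sign a short-lived session ticket."""
    now = time.time() if now is None else now
    payload = json.dumps({"sub": player_id, "exp": now + ttl_s}).encode()
    sig = hmac.new(SECRET, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode() + "."
            + base64.urlsafe_b64encode(sig).decode())

def verify_token(token, now=None):
    """Game server side: check the ticket locally, with no call to the auth service.

    Returns the player ID, or None if the token is forged, malformed, or expired.
    """
    now = time.time() if now is None else now
    try:
        payload_b64, sig_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # wrong number of parts or invalid base64
        return None
    expected = hmac.new(SECRET, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return None
    claims = json.loads(payload)
    return claims["sub"] if claims["exp"] > now else None
```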

Improvements like these can make a big difference in stability and cost control when your game starts scaling to thousands - or even millions - of concurrent players.
