How to Build a Solution With Predictive Models for Planning

Nov 6, 2025

Emilia Skonieczna

When you think about planning, what comes to your mind?

For decades, it meant relying on knowledge, experience, and a touch of intuition.

Now imagine it as a chess match - you can't win by thinking only one move ahead. Predictive models let you plan ten moves ahead.

That's the theory - but how does it work in practice?

Predictive planning uses data, statistical models, and machine learning to forecast what's likely to happen next - whether it's sales, demand, or resource needs.

Instead of reacting to change, organizations leverage predictive analytics to anticipate it.

In other words, predictive planning turns uncertainty into insight.

It gives teams confidence - when you mix human expertise with the capabilities of predictive models, you get a formula for planning with fewer - or even no - assumptions.

Predictive planning is a combination of traditional planning processes and predictive analytics models. It analyzes historical and real-time data to forecast future outcomes.

Although predictive planning relies on past and current data, the goal isn't to repeat it - it's to recognize patterns and trends that reveal what's likely to happen next.

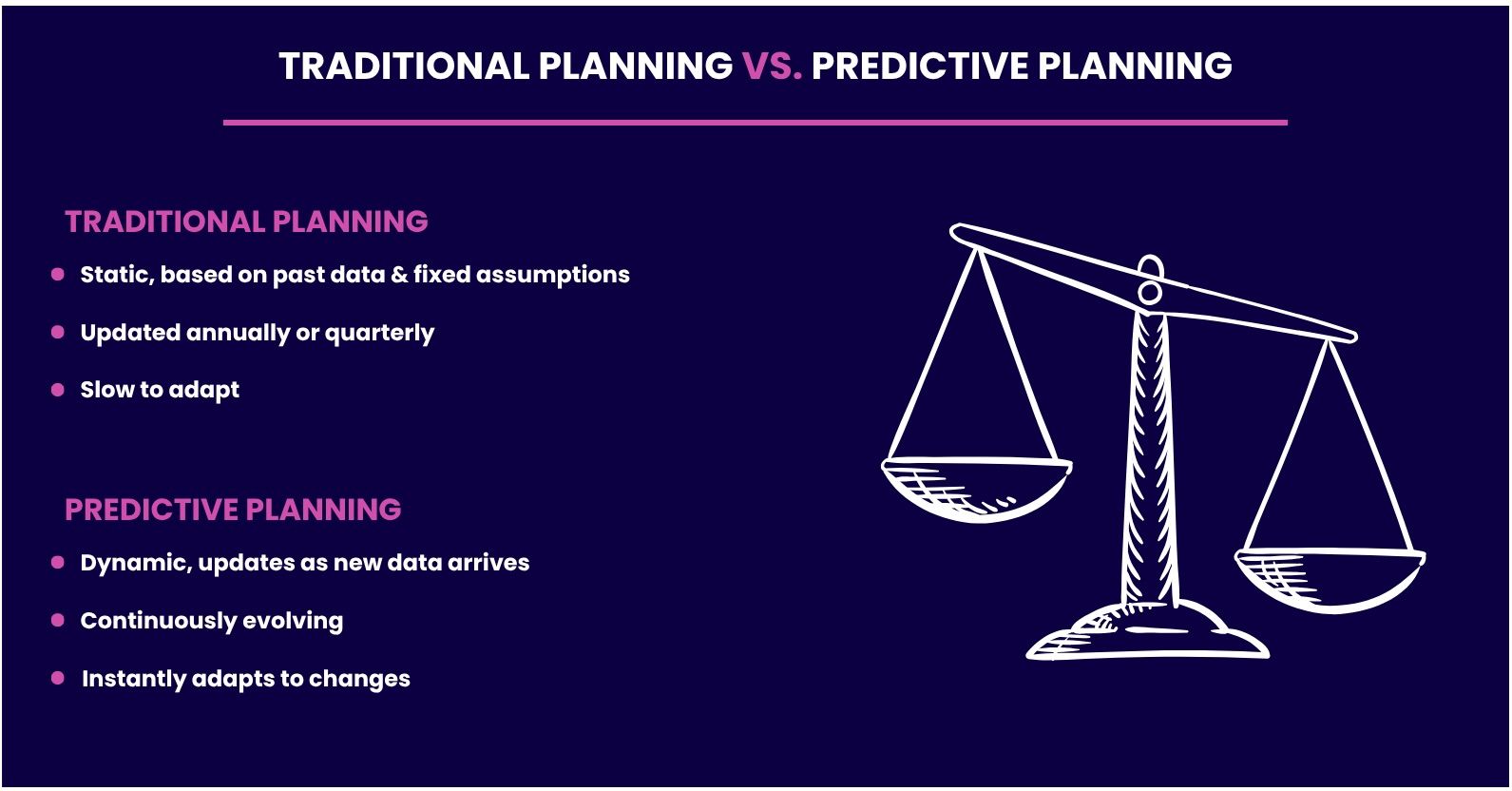

The main difference between traditional and predictive planning lies in their nature.

Traditional planning is static - it relies on past data and fixed assumptions. Predictive planning works differently - it's dynamic and adjusts as new data arrives.

Traditional models are usually built once a year, maybe once a quarter. Predictive ones evolve continuously.

Something big happens? It's immediately reflected in the model and the forecast.

A sudden change in demand or a new market trend? The system adapts instantly.

Being able to react to what's happening around the world immediately sounds quite important, doesn't it?

Predictive planning improves accuracy by uncovering patterns that are invisible to human analysts. It also makes scenario analysis easier - you can test "what if" situations faster and more accurately, without manual calculations or assumptions.

Because you can forecast future needs, you can allocate budgets, labor, or inventory with laser precision - which means you have a clear direction for your actions.

Predictive planning is now used in nearly every industry.

Supply Chain:

Supply chain teams use predictive models to forecast demand, optimize inventory, and anticipate shipping delays. For example, a retailer may combine historical sales data with weather forecasts and holiday patterns to predict when certain items will spike in popularity. Manufacturers apply similar predictive techniques to schedule production and avoid overstocking or shortages.

Finance:

Finance teams use predictive models to analyze cash flow patterns, customer payment behavior, and market risk. CFOs rely on these models to forecast revenue, identify when liquidity might tighten, and detect early signals of credit default or market fluctuations before they become major problems.

Workforce planning:

HR departments predict hiring needs, employee turnover, and training requirements. For example, seasonal businesses can plan ahead when additional staff will be needed, or which roles are likely to face shortages based on past attrition trends.

Marketing:

Marketing teams use predictive analytics to forecast customer behavior - from purchase likelihood to the risk of churn. By analyzing engagement data, campaign performance, and external factors like seasonality, teams can identify which segments are most likely to respond best to specific offers.

Of course, many of these analyses can be performed manually, but predictive modeling can process large datasets and detect patterns faster and with greater accuracy. It has become a core capability of modern planning.

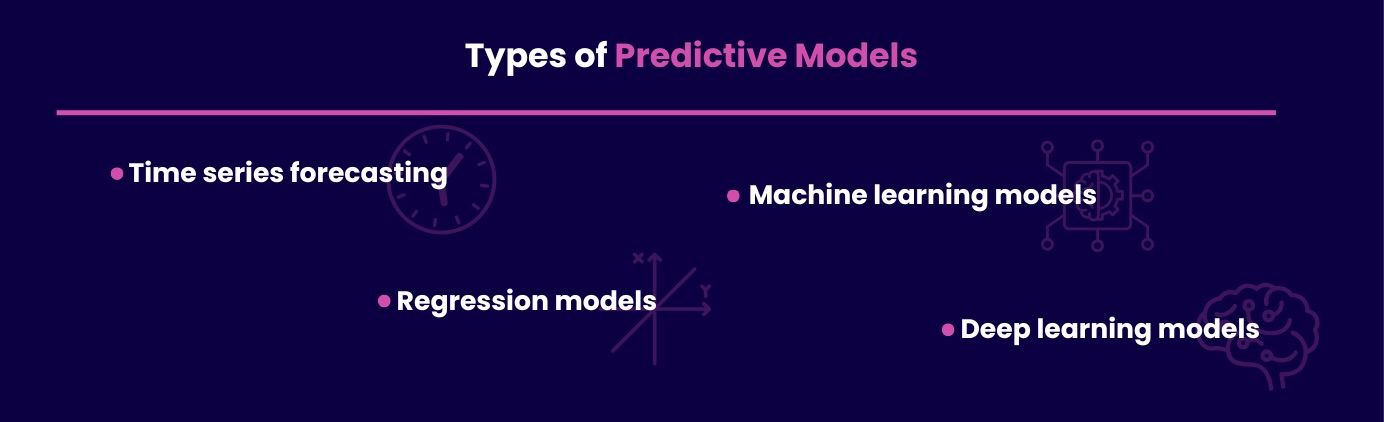

There's no such thing as a one-size-fits-all predictive model. The choice depends on your data, goals, and level of technical maturity. Below is a quick tour through the most common types of models - from statistical classics to modern AI ones.

Models that analyze data over time - like sales per month or daily website traffic - help detect patterns and trends.

Among the most commonly used approaches are ARIMA and Prophet, both designed to forecast future values based on historical data.

ARIMA (AutoRegressive Integrated Moving Average) is one of the old-school classics, great for series with stable, predictable patterns.

Prophet, developed by Meta, handles seasonality and missing data better, making it ideal for business forecasting where trends and cycles influence decisions.
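To make "forecasting future values based on historical data" concrete, here is a minimal sketch of the autoregressive idea behind the "AR" in ARIMA. It is not ARIMA or Prophet itself: it fits only a first-order model, y[t] = c + phi * y[t-1], by least squares. The function names and the sales figures are made up for illustration.

```python
from statistics import mean

def fit_ar1(series):
    """Fit y[t] = c + phi * y[t-1] by ordinary least squares."""
    x = series[:-1]  # previous values
    y = series[1:]   # current values
    x_bar, y_bar = mean(x), mean(y)
    cov = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    var = sum((a - x_bar) ** 2 for a in x)
    phi = cov / var
    c = y_bar - phi * x_bar
    return c, phi

def forecast_ar1(series, steps, c, phi):
    """Roll the fitted equation forward, feeding each forecast back in."""
    last = series[-1]
    out = []
    for _ in range(steps):
        last = c + phi * last
        out.append(last)
    return out

# Hypothetical monthly sales generated by y[t] = 20 + 0.8 * y[t-1]
monthly_sales = [50.0]
for _ in range(11):
    monthly_sales.append(20 + 0.8 * monthly_sales[-1])

c, phi = fit_ar1(monthly_sales)
next_quarter = forecast_ar1(monthly_sales, 3, c, phi)
```

Real series are noisier and usually seasonal, which is where the differencing and moving-average parts of ARIMA, or Prophet's seasonality handling, come in.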

Regression models find relationships between variables - for example, how pricing, marketing spend, and economic indicators affect sales. Linear regression is simple and interpretable, while Ridge and Lasso regularize the model to avoid overfitting. They're perfect for planners who need transparency and reliable directional insights.

Linear regression works by fitting a straight line that captures how inputs relate to an outcome.

Ridge slightly reduces the impact of all variables.

Lasso can eliminate the least useful variables altogether.
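The difference between the two penalties is easiest to see in a simplified case. The sketch below assumes orthonormal features, where both methods have a closed form applied to the ordinary least-squares coefficients (with the objectives written as ½‖y − Xβ‖² + (λ/2)‖β‖² for Ridge and ½‖y − Xβ‖² + λ‖β‖₁ for Lasso). The coefficient values are made up, and real data rarely meets this assumption, so treat it as an illustration rather than a fitting routine.

```python
def ridge_shrink(ols_coefs, lam):
    """Ridge under orthonormal features: scale every coefficient by 1 / (1 + lam)."""
    return [b / (1.0 + lam) for b in ols_coefs]

def lasso_shrink(ols_coefs, lam):
    """Lasso under orthonormal features: soft-threshold, zeroing small coefficients."""
    out = []
    for b in ols_coefs:
        magnitude = max(abs(b) - lam, 0.0)
        out.append(magnitude if b >= 0 else -magnitude)
    return out

# Hypothetical least-squares coefficients for three features
ols = [3.0, -1.5, 0.2]

ridge = ridge_shrink(ols, 0.5)  # every coefficient shrinks, none reaches zero
lasso = lasso_shrink(ols, 0.5)  # the weakest coefficient is dropped entirely
```

Ridge keeps all three variables with smaller weights, while Lasso removes the third one, which is why Lasso is often used for feature selection.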

Machine learning models like Random Forest and XGBoost take forecasting to the next level by identifying complex, nonlinear relationships in data. They handle messy datasets, capture interactions between variables, and process large feature sets with ease. Although they're less interpretable, these models often deliver higher accuracy, which makes them useful when accuracy matters more than interpretability.

Random Forest builds many independent decision trees and averages their predictions, which helps reduce overfitting.

XGBoost builds trees sequentially - each one learning from the previous one's errors - which often makes it more accurate, while its optimized implementation keeps training fast.
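The "each tree learns from the previous one's errors" idea can be sketched in a few lines. This is a bare-bones gradient boosting loop with one-split trees (stumps) on a single feature, not XGBoost itself, which adds regularization and many engineering optimizations. The data, function names, and hyperparameters are illustrative assumptions.

```python
from statistics import mean

def fit_stump(x, y):
    """Find the single threshold split on x that minimizes squared error."""
    best = None
    for threshold in sorted(set(x))[:-1]:
        left = [yi for xi, yi in zip(x, y) if xi <= threshold]
        right = [yi for xi, yi in zip(x, y) if xi > threshold]
        l_mean, r_mean = mean(left), mean(right)
        sse = (sum((yi - l_mean) ** 2 for yi in left)
               + sum((yi - r_mean) ** 2 for yi in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, l_mean, r_mean)
    _, threshold, l_mean, r_mean = best
    return lambda xi: l_mean if xi <= threshold else r_mean

def boost(x, y, rounds=20, learning_rate=0.5):
    """Each new stump is fitted to the residuals left by the stumps before it."""
    base = mean(y)
    stumps = []
    preds = [base] * len(y)
    for _ in range(rounds):
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        preds = [pi + learning_rate * stump(xi) for xi, pi in zip(x, preds)]
    return lambda xi: base + learning_rate * sum(s(xi) for s in stumps)

def mse(model, x, y):
    return mean((model(xi) - yi) ** 2 for xi, yi in zip(x, y))

# Hypothetical nonlinear relationship
x = list(range(1, 11))
y = [xi ** 2 for xi in x]

one_stump = fit_stump(x, y)
boosted = boost(x, y)
```

On this toy data, `mse(boosted, x, y)` comes out lower than `mse(one_stump, x, y)`, because every round corrects part of what the earlier stumps got wrong. A Random Forest would instead fit its trees independently on resampled data and average them.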

When your data involves time-dependent sequences, like energy demand, sensor data, or financial transactions, deep learning predictive models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) excel. They analyze historical patterns over time, making them highly effective for detecting seasonality, anomalies, and emerging trends.

RNNs capture short-term dependencies in sequential data.

LSTMs extend the capability of capturing dependencies by remembering information over longer sequences.

If we put them side by side:

regression models are the simplest and easiest to explain

time series models are reliable for data with consistent patterns over time

machine learning models offer a balance between flexibility and performance

deep learning models can deliver the highest accuracy on large, complex sequential data - but at the cost of complexity

The right choice depends on your data's maturity and business goals.

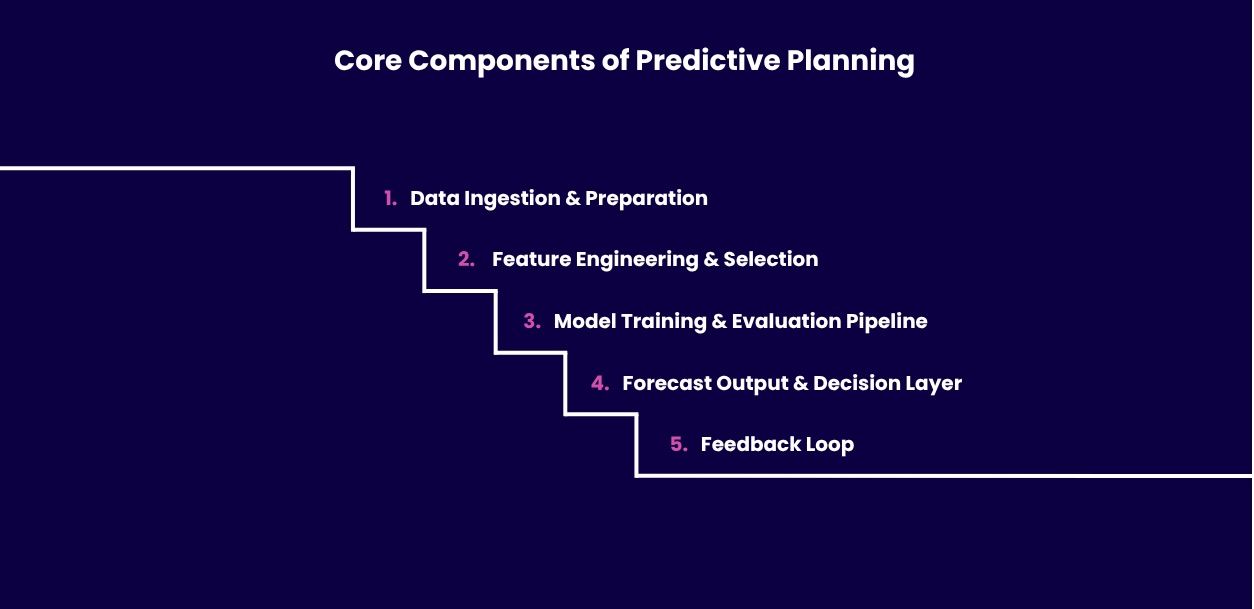

Building a predictive planning system isn't just about plugging data into a fancy model. It's an ecosystem - data pipelines, models and algorithms, feedback loops, and business integration all working together. To understand how it all works, let's look at the key building blocks.

This is where it all begins: collecting, cleaning, and structuring raw data. Data might come from ERP systems, sensors, APIs, or spreadsheets. The goal is to ensure it's accurate, complete, and consistent before it reaches your model. You can compare it to cooking - bad data means a bad meal.

Features are the DNA of your model - the variables that have predictive value. Feature engineering is the process of transforming raw data into meaningful information - like changing timestamps into weekdays or temperatures into heatwave indicators.

Models require high-quality and well-defined features, which is why smart feature selection removes noise and keeps only what matters most to the forecast.
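The two transformations mentioned above, timestamps into weekdays and temperatures into heatwave indicators, could look roughly like this. The record layout, field names, and the 30 °C threshold are assumptions made for the example.

```python
from datetime import datetime

HEATWAVE_THRESHOLD_C = 30.0  # assumed cutoff; tune per region

def engineer_features(record):
    """Turn one raw record into model-ready features."""
    ts = datetime.fromisoformat(record["timestamp"])
    return {
        "weekday": ts.weekday(),          # 0 = Monday ... 6 = Sunday
        "is_weekend": ts.weekday() >= 5,
        "month": ts.month,
        "is_heatwave": record["temperature_c"] >= HEATWAVE_THRESHOLD_C,
    }

# Hypothetical raw record from a sales system
raw = {"timestamp": "2025-07-12T14:30:00", "temperature_c": 33.5}
features = engineer_features(raw)
```

The model never sees the raw timestamp, only the signals a planner would actually reason about: which day it is, whether it is a weekend, and whether it is unusually hot.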

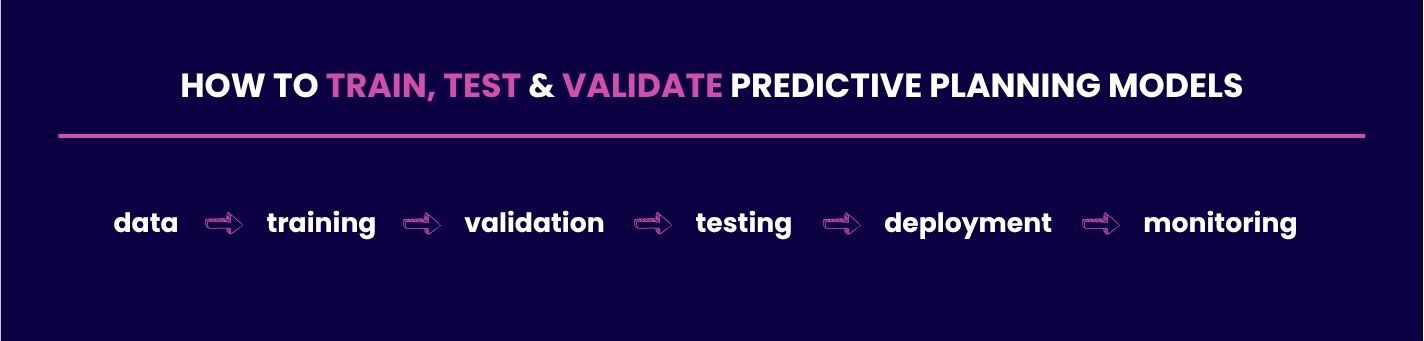

At the core of predictive planning lies a continuous learning loop. Models are trained on historical data, tested on new data, and evaluated by how accurately they predict future outcomes. The pipeline automates this cycle to ensure consistency and scalability.

So... train, test, refine, repeat.

Once the model delivers its results, the real value begins to show. The forecasts are visualized in interactive dashboards, integrated into planning tools, or translated into automated actions.

But what's the goal? It's not just to predict what's next - it's to make better and faster decisions.

Even the best predictive models don't stay perfect forever. Market conditions, customer behaviors, and data patterns change. A feedback loop, where new results are fed back into the system, allows continuous learning through predictive algorithms that adjust to future trends and keep the model reliable over time.

It's like giving your model regular tune-ups so it doesn't go stale.

Data is the lifeblood of predictive planning. The quality of your input determines how reliable your predictions will be - no exceptions. Every forecast starts with analyzing historical and current data.

Here is how to do it right.

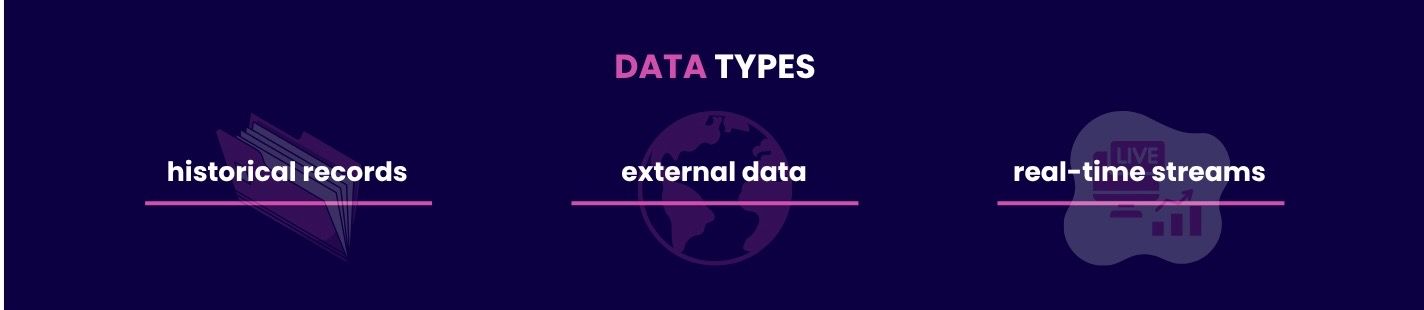

Predictive planning relies on data variety - each type has a different role in the forecast.

Historical records are your foundation. They include sales transactions, production logs, staffing levels, or financial statements collected over months or years. By analyzing them, you can uncover patterns, cycles, and seasonality - for example, how costs fluctuate each quarter.

External data adds the context that internal systems can't capture on their own. Market data, macroeconomic indicators, weather forecasts, competitor pricing, or even social sentiment can all improve prediction accuracy. A good example is a beverage company using temperature forecasts to predict cold drink sales.

Real-time streams keep the model aware of what’s happening right now. These can include IoT sensor readings, live website traffic, POS transactions, or logistics feeds. For example, a supply chain planner might monitor GPS data to adjust delivery schedules instantly.

The best results come from combining all three data types. Together, they give you a full picture of what's happening - and what's about to happen.

High-quality data is consistent, complete, timely, and relevant. Inaccurate timestamps or inconsistent measurements can affect predictions. Many teams use automated validation checks to catch errors early.
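An automated validation check can be as simple as a function that scans each incoming batch and reports problems before the data reaches the model. The sketch below assumes rows with hypothetical `date` and `units_sold` fields and checks only three rules: completeness, non-negative values, and unique dates.

```python
from datetime import date

def validate_rows(rows, required=("date", "units_sold")):
    """Return a list of (row_index, problem) tuples; empty means the batch passed."""
    problems = []
    seen_dates = set()
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) is None:
                problems.append((i, f"missing {field}"))
        units = row.get("units_sold")
        if units is not None and units < 0:
            problems.append((i, "negative units_sold"))
        day = row.get("date")
        if day is not None:
            if day in seen_dates:
                problems.append((i, "duplicate date"))
            seen_dates.add(day)
    return problems

# Hypothetical batch with three deliberate errors
batch = [
    {"date": date(2025, 1, 1), "units_sold": 120},
    {"date": date(2025, 1, 2), "units_sold": None},
    {"date": date(2025, 1, 2), "units_sold": -5},
]
issues = validate_rows(batch)
```

In a real pipeline the rules would come from the business context, and a failed check would typically block the load or raise an alert rather than just return a list.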

Remember: a model is only as smart as the data used to develop it.

Missing values and outliers may seem minor, but they can break the entire analysis. They're small, but dangerous.

Fortunately, there are ways to handle them. Techniques like interpolation, imputation, or trimming help keep the dataset clean and consistent.

But there's a fine line between caution and overcorrecting - don't remove real anomalies that might reveal important trends.
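As a rough sketch of these techniques, the example below fills gaps by linear interpolation and flags, rather than deletes, suspicious values so that a person can decide whether they are errors or real anomalies. The median-absolute-deviation rule and the cutoff `k=3.0` are one possible choice, not a standard the article prescribes, and the demand figures are invented.

```python
from statistics import median

def interpolate_missing(values):
    """Fill None gaps linearly between the nearest known neighbours."""
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (filled[right] - filled[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = filled[left] + step * (i - left)
    return filled  # leading or trailing gaps are left as None

def flag_outliers(values, k=3.0):
    """Flag points more than k median absolute deviations from the median."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    return [mad > 0 and abs(v - med) > k * mad for v in values]

# Hypothetical daily demand with gaps and one spike
daily_demand = [100, None, 104, 106, None, None, 112, 400]

clean = interpolate_missing(daily_demand)
outliers = flag_outliers(clean)
```

Here only the final value of 400 is flagged. Whether it is a data error or a genuine demand spike is a judgment call for someone who knows the business.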

Building a predictive model is not only about feeding data - it's also about preparing that data in a way the model can truly understand. Different features often operate on different scales.

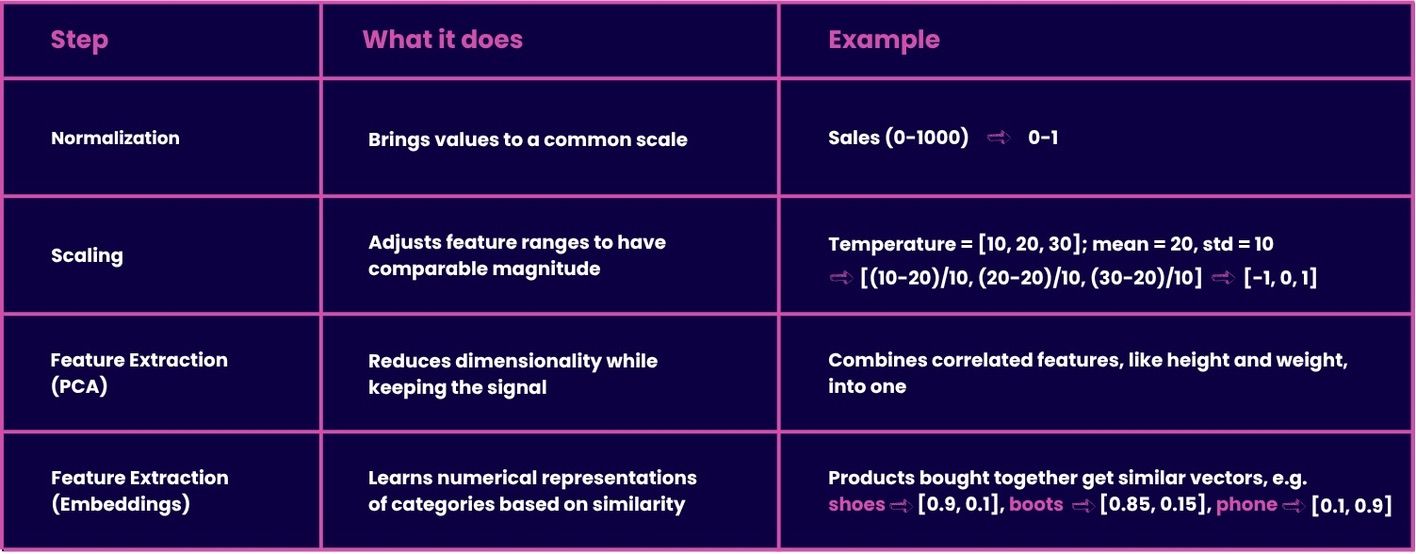

Normalization and scaling bring features to comparable ranges to make it easier for models to understand relationships. Feature extraction techniques, like PCA or embeddings, take it a step further by reducing complexity without weakening the signal strength.
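Two common ways to bring features onto comparable ranges are min-max scaling and standardization. The sketch below uses made-up price and footfall columns and assumes each column has more than one distinct value (otherwise the division would fail).

```python
from statistics import mean, pstdev

def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Rescale values to zero mean and unit standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

# Hypothetical features on very different scales
price = [10.0, 20.0, 30.0]
footfall = [1000.0, 3000.0, 5000.0]

price_scaled = min_max_scale(price)
footfall_scaled = min_max_scale(footfall)
footfall_z = standardize(footfall)
```

After scaling, both columns run from 0 to 1, so neither dominates simply because of its units. In practice the scaling parameters should be computed on the training data only and then reused for new data.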

You want to achieve a high level of accuracy? Then this step is necessary.

Generic data gets you generic results. Domain expertise helps identify the subtle but valuable variables - like promotion frequency in retail or weather delays in logistics. They often reveal relationships that purely statistical models might overlook.

The secret ingredient? Pairing data scientists with subject-matter experts.

Choosing a model is about selecting the one that best fits your goals, data, and resources. Don't focus on complexity - focus on what actually meets your needs.

If you're predicting quantities - like sales or demand - go for forecasting models. If you're categorizing outcomes - like "will churn" or "won't churn" - choose classification models.

Forecasting models estimate how much or when something will happen, while classification models identify the category of each observation.

Always start by defining the question, not the algorithm.

Big datasets with long historical depth work best for machine learning or deep learning models. On the other hand, smaller datasets might perform best with time series or regression methods.

The granularity (hourly, daily, monthly) also affects model choice - finer intervals need models that can handle high-frequency noise.

High-performing models like neural networks often act as black boxes, while simpler ones like linear regression offer clear explanations. Regulated industries like finance and healthcare often value interpretability as much as, or even more than, accuracy - because every decision must be explainable and auditable.

The art lies in finding your sweet spot between explainability and the desired level of accuracy.

Python and R are the go-to languages for data modeling. AutoML tools - like Google Vertex AI or DataRobot - help non-technical people build reliable models fast.

Cloud-native ML platforms integrate data pipelines, training, and deployment to make predictive planning scalable and less painful for IT teams.

Ensemble models combine multiple learning algorithms to improve performance. Think of them as a team rather than a single player. Hybrid models can merge statistical forecasting with machine learning to balance stability and flexibility. When accuracy is crucial, ensembles are your best choice.
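The simplest form of this "team" approach is a weighted average of forecasts from different models. The sketch below assumes two hypothetical models that have already produced forecasts for the same three periods; the model names, numbers, and weights are illustrative.

```python
def ensemble_forecast(forecasts, weights=None):
    """Combine per-model forecasts (same horizon) into a weighted average."""
    names = list(forecasts)
    if weights is None:
        weights = {name: 1.0 for name in names}  # equal weights by default
    total = sum(weights[name] for name in names)
    horizon = len(forecasts[names[0]])
    return [
        sum(weights[name] * forecasts[name][t] for name in names) / total
        for t in range(horizon)
    ]

# Hypothetical forecasts for the next three periods
forecasts = {
    "statistical": [100.0, 110.0, 120.0],
    "ml": [104.0, 108.0, 128.0],
}

blended = ensemble_forecast(forecasts)
tilted = ensemble_forecast(forecasts, weights={"statistical": 3.0, "ml": 1.0})
```

Weights would normally be chosen from validation performance, giving more say to whichever model has been more reliable recently.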

Model training is where ideas meet real data. It's the step that makes sure your model doesn't just work on the past, but also handles new data well.

Data is usually split into three parts:

training - to teach the model

validation - to tune it

testing - to evaluate final performance

This keeps results honest - no testing on what the model already knows.
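For planning data, which is ordered in time, the split is usually chronological rather than random, so the model is always evaluated on periods that come after the ones it learned from. A minimal sketch, assuming rows already sorted by date and a 70/15/15 split chosen for illustration:

```python
def chronological_split(rows, train_pct=70, val_pct=15):
    """Split time-ordered rows into train / validation / test without shuffling."""
    n = len(rows)
    train_end = n * train_pct // 100
    val_end = n * (train_pct + val_pct) // 100
    return rows[:train_end], rows[train_end:val_end], rows[val_end:]

# Hypothetical history: 100 time-ordered observations
history = list(range(100))

train, val, test = chronological_split(history)
```

The oldest 70 observations train the model, the next 15 tune it, and the most recent 15 are held back for the final evaluation.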

Metrics show how good your model really is. MAE and RMSE measure average errors, MAPE puts them in percentages, and R² shows how much of the variation the model explains.

The right metric depends on your use case - not all errors are created equal.
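The four metrics can be computed directly from actual and predicted values. The sketch below uses invented numbers; note that MAPE is undefined whenever an actual value is zero, which this simple version does not guard against.

```python
from math import sqrt
from statistics import mean

def mae(actual, predicted):
    """Mean absolute error, in the units of the target."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))

def rmse(actual, predicted):
    """Root mean squared error; penalizes large misses more than MAE."""
    return sqrt(mean((a - p) ** 2 for a, p in zip(actual, predicted)))

def mape(actual, predicted):
    """Mean absolute percentage error; assumes no actual value is zero."""
    return 100 * mean(abs((a - p) / a) for a, p in zip(actual, predicted))

def r2(actual, predicted):
    """Share of the variance in the actuals explained by the predictions."""
    avg = mean(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - avg) ** 2 for a in actual)
    return 1 - ss_res / ss_tot

# Hypothetical actuals and forecasts
actual = [100.0, 200.0, 300.0, 400.0]
predicted = [110.0, 190.0, 310.0, 390.0]

scores = {
    "MAE": mae(actual, predicted),
    "RMSE": rmse(actual, predicted),
    "MAPE": mape(actual, predicted),
    "R2": r2(actual, predicted),
}
```

Every forecast here misses by exactly 10 units, so MAE and RMSE agree, yet MAPE treats the miss on the 100-unit period as four times worse than the miss on the 400-unit period. That is the sense in which not all errors are equal.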

Overfitting happens when a model memorizes the data instead of actually learning from it. Techniques like regularization, cross-validation, and pruning help prevent it.

Data leakage is another common issue. It happens when future or extra information sneaks into training, which makes the results look better than they really are. If you catch this early, you'll avoid an unwanted surprise.
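One common source of leakage in forecasting is a feature that accidentally includes the value it is supposed to predict. The sketch below builds a rolling-average feature from strictly earlier observations only; the function name, window size, and sales figures are assumptions for illustration.

```python
def past_only_rolling_mean(series, window=3):
    """Feature for time t built from values strictly before t (no leakage)."""
    features = []
    for t in range(len(series)):
        past = series[max(0, t - window):t]  # slice stops before index t
        features.append(sum(past) / len(past) if past else None)
    return features

# Hypothetical weekly sales
sales = [10, 12, 14, 16, 18]

safe = past_only_rolling_mean(sales)
```

The first period has no history, so its feature is `None`. A leaky version would slice up to `t + 1`, letting the current week's sales leak into the input used to predict that same week.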

Even great models lose accuracy over time when data patterns change - that's model drift.

But there are ways to deal with it:

regular monitoring and retraining help catch it early

smart systems alert teams before drift becomes damage

Early detection means fewer surprises.
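A basic drift monitor compares recent forecast errors with the errors seen when the model was first validated. The sketch below raises a flag when the recent mean absolute error exceeds the baseline by a chosen factor. The 1.5x tolerance and the error values are assumptions, not recommended settings.

```python
from statistics import mean

def detect_drift(baseline_errors, recent_errors, tolerance=1.5):
    """Flag drift when recent MAE exceeds baseline MAE by `tolerance` times."""
    baseline_mae = mean(abs(e) for e in baseline_errors)
    recent_mae = mean(abs(e) for e in recent_errors)
    return recent_mae > tolerance * baseline_mae, baseline_mae, recent_mae

# Hypothetical forecast errors (actual minus predicted)
baseline = [2.0, -1.0, 3.0, -2.0]  # errors at validation time
recent = [5.0, -4.0, 6.0, -5.0]    # errors over the latest periods

drifted, base_mae, recent_mae = detect_drift(baseline, recent)
```

When `drifted` is true, the usual next steps are to alert the team and schedule a retraining run on more recent data.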

Technology is the foundation of modern predictive planning. If you choose it wisely, you'll be efficient, not overwhelmed.

Here are some of the key tools and platforms that make predictive planning possible.

Choosing the right programming language makes all the difference. These two are the go-to choices in predictive planning:

Python dominates the field thanks to libraries like pandas, NumPy, and scikit-learn.

R remains a favorite for statistical analysis and visualization.

Both are open-source, widely used, and proven across industries.

Azure ML, Google Vertex AI, and AWS SageMaker manage the core processes - data pipelines, training environments, and deployment.

They scale automatically, integrate with enterprise data sources, and support version control throughout the whole development of the model.

They are perfect for teams moving from prototype to production.

Not every team needs to code models from scratch. Ready-made tools like Prophet (an open-source library from Meta) and Amazon Forecast (a managed cloud service) offer advanced forecasting engines with minimal setup. They're great for accelerating projects without deep ML expertise.

Building models is only half the job - keeping them running reliably is the other half. Apache Airflow orchestrates data workflows, dbt manages the data transformations inside them, and MLflow tracks model versions and metrics.

And yes, our team can help you integrate all of these seamlessly, so you can focus on planning, not plumbing.

Predictive planning offers huge value, but it also comes with potential risks. Below are the main challenges you should keep in mind.

If your data reflects bias - human, geographic, or historical - your model will too.

Limited or one-sided data can cause similar problems, which leads to gaps in predictions and performance.

Make sure to diversify your data and keep it representative - that's how you reduce the bias.

Remember: models don't create bias, they inherit it.

In sectors like finance, insurance, or healthcare, decisions must be explainable. Complex black-box models can be hard to justify. For example, a bank can't simply deny a loan application because "the model said so". It has to explain what influenced that decision.

Tools like SHAP or LIME can help translate model reasoning into human language - it's a must for compliance and trust.

Planning in real-time, like logistics routing, demands ultra-low latency. Data must be processed and delivered instantly. When something is delayed even slightly, predictions can become inaccurate.

If you want to reduce latency, you should optimize pipelines and use edge computing - it can help, but it's a technical challenge worth planning for early.

Automation should support, not replace, human judgment. Model outputs should be reviewed by people - especially in strategic or high-stakes areas, like healthcare or decisions with ethical implications.

The best systems mix machine precision with human expertise.

Predictive systems can become expensive if they're not architected well. Cloud costs, data storage, and compute usage can escalate quickly. Starting small and scaling with ROI helps maintain balance between innovation and budget.

Predictive planning isn't a one-off project - it's a continuous capability. Sustaining it requires process, culture, and trust.

But here's the good part - maintaining this discipline over time is what truly delivers the long-term benefits of predictive analytics.

Data changes, markets evolve - and your models should too. Schedule regular retraining cycles and set alerts to detect when performance starts to decline. You need automation - it helps keep everything stable and efficient without constant manual checks.

People won't use what they don't understand. Educating stakeholders can bring a lot of value - for example, showing how predictions are made and how to interpret them builds confidence.

Transparency turns doubt into adoption.

Data scientists understand models, while business teams understand context. So it's no surprise that the real magic happens when they collaborate. Predictive planning succeeds only when technical expertise aligns with strategic decisions.

Version control, audit trails, and proper documentation are necessary steps to build a predictive system. They ensure transparency, reproducibility, and regulatory compliance.

Look at it this way - treat your models like living assets, tracked and managed over time, and you'll be able to keep them reliable and consistent.

Encourage curiosity. Let teams test new data sources, algorithms, and "what-if" scenarios. When a culture of experimentation becomes part of daily practice, predictive planning transforms from a tool into a mindset - one that thrives on continuous learning.

Scenario planning extends this by helping organizations anticipate potential risks and opportunities, making it easier to adapt strategies before changes occur.

Predictive planning combines data, analytics, and forecasting to make decisions proactively.

It replaces static, assumption-based plans with adaptive, data-driven insights.

The key success factors are data quality, continuous learning, and collaboration between business and data teams.

A culture of experimentation and scenario planning keeps organizations prepared for future change.
